Nginx Web Server


About NGINX

NGINX is open-source web server software that is also used for load balancing, reverse proxying, HTTP caching, and media streaming. It was designed for maximum stability and performance with a low memory footprint. NGINX uses an event-driven architecture, which needs less memory than the traditional process-per-connection model.

Goal behind developing NGINX:

Traditional web servers handled each client connection in a separate thread or process. As traffic to a server grew over time, the connection rate increased and serving capacity suffered. Spawning processes consumed additional memory, and every time the CPU switched from one task to another (a context switch) it spent CPU time, so both memory and CPU utilization were high. NGINX was designed to achieve high performance and to make better use of server hardware, scaling with the ever-increasing demands of web hosting.

Features of NGINX:

- Capacity to handle more than 10,000 simultaneous connections with a low memory footprint.

- Handling of static files, index files, and auto-indexing.

- Reverse proxy with caching.

- Load balancing.

- FastCGI support with caching.

- IPv6 support.

NGINX is one of the most popular modular, event-driven, asynchronous, single-threaded web servers. Let us look at what each of these terms means.

In this article you may have come across the term event-driven and wondered what it means. It is an approach that handles tasks as events: an incoming connection, a disk read, and so on are all events. The operating system notifies NGINX when such an event occurs, for example when a new connection arrives or when data is ready to be read. NGINX workers use these notifications so that resources are used and released on demand, which results in better CPU and memory usage. Each NGINX worker process can serve thousands of requests and connections per second. NGINX does not create a new process for every new connection; instead, a worker picks up new requests from a queue and handles them in its run loop, which lets each worker process many connections.

The run loop that NGINX starts does not block (hang) waiting for any particular event; this is what we call asynchronous. NGINX asks the operating system to raise notifications for the events it is interested in and monitors a queue for those notifications. When a notification arrives, the NGINX run loop triggers the corresponding action. This is how NGINX uses the shared resources on the server efficiently.
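To make the event-driven, asynchronous run loop more concrete, here is a minimal Python sketch (NGINX itself is written in C, so this only mirrors the idea). One thread registers several connections with the operating system and acts only on the ones reported as ready, so it never blocks on any single connection. `selectors.DefaultSelector` picks the most efficient mechanism available on the platform (for example epoll on Linux or kqueue on BSD/macOS), the same family of mechanisms NGINX relies on. The function name `run_event_loop` and the "upper-case echo" behaviour are hypothetical, chosen only for illustration; clients are simulated with `socket.socketpair()` so the example needs no network setup.

```python
import selectors
import socket


def run_event_loop(messages):
    """Serve several simulated connections from ONE thread (hypothetical demo).

    Each client is a socket.socketpair(): the "client" end sends a message and
    the "server" end is registered with the selector. The loop only handles
    sockets that the OS reports as readable, so it never waits on one
    particular connection while others are ready.
    """
    sel = selectors.DefaultSelector()
    clients = []
    for msg in messages:
        server_end, client_end = socket.socketpair()
        server_end.setblocking(False)
        # Ask the OS to notify us when this connection has data to read.
        sel.register(server_end, selectors.EVENT_READ)
        client_end.sendall(msg.encode())
        clients.append(client_end)

    pending = len(messages)
    while pending:
        # Wait until at least one registered socket is ready (an "event").
        for key, _ in sel.select():
            conn = key.fileobj
            data = conn.recv(1024)
            # Handle the event: reply with the data in upper case.
            conn.sendall(data.upper())
            sel.unregister(conn)
            conn.close()
            pending -= 1

    # Collect each client's reply, in the order the clients were created.
    replies = [c.recv(1024).decode() for c in clients]
    for c in clients:
        c.close()
    sel.close()
    return replies
```

For example, `run_event_loop(["get /a", "get /b"])` returns `["GET /A", "GET /B"]`: both connections are served by the same thread without creating a process or thread per connection.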

Single-threaded means that a single worker handles many user connections, which avoids the CPU cost of context switching between many threads or processes.

NGINX uses event notifications and assigns specific tasks to separate processes. Connections are processed efficiently in a run loop inside a limited number of single-threaded processes called workers. Each NGINX worker can handle thousands of concurrent connections and requests per second. The NGINX worker code consists of the core and functional modules. The core is responsible for maintaining the run loop, and the modules provide application-layer functionality. This modular architecture lets developers extend the web server's feature set while keeping the core untouched.
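The split between a core and pluggable modules can be illustrated with a small Python sketch. This is not NGINX's module API (real NGINX modules are written in C against its own interfaces); the `Core` class, the "return a response or `None`" convention, and the two example modules are all hypothetical. The point it shows is that new features are added by registering modules, while the dispatching core stays unchanged.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical module interface: a callable that receives a request and either
# returns a response string or None to let the next module try.
Module = Callable[[Dict[str, str]], Optional[str]]


class Core:
    """Hypothetical core: owns the dispatch logic, knows nothing about features."""

    def __init__(self) -> None:
        self.modules: List[Module] = []

    def register(self, module: Module) -> None:
        # Extending the server means registering a module, not editing Core.
        self.modules.append(module)

    def handle(self, request: Dict[str, str]) -> str:
        # Offer the request to each module in registration order.
        for module in self.modules:
            response = module(request)
            if response is not None:
                return response
        return "404 Not Found"


def static_files(request: Dict[str, str]) -> Optional[str]:
    """Hypothetical module that serves anything under /static/."""
    if request["path"].startswith("/static/"):
        return "200 OK (static file)"
    return None


def health_check(request: Dict[str, str]) -> Optional[str]:
    """Hypothetical module that answers a health-check path."""
    if request["path"] == "/health":
        return "200 OK"
    return None
```

After `core = Core()`, `core.register(static_files)` and `core.register(health_check)`, a request for `/health` is answered by the second module, and a path no module claims falls through to `"404 Not Found"`.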

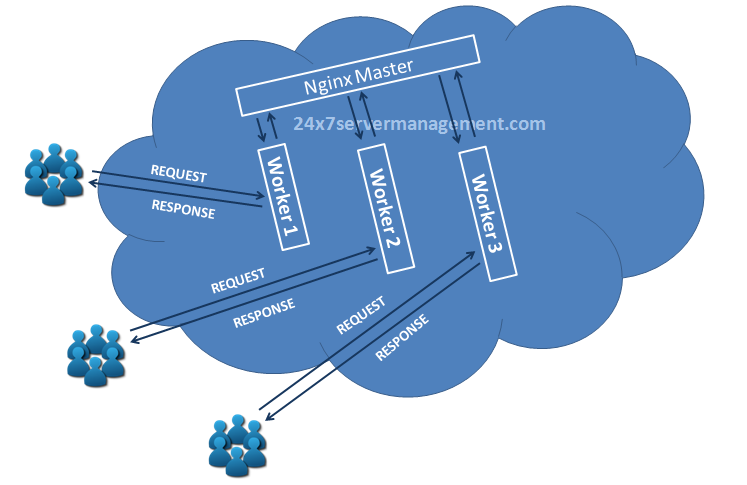

NGINX Architecture:

How Does NGINX Work?

NGINX uses a conventional master/worker process model:

The master process performs privileged operations such as reading the configuration and binding to ports, and then creates a small number of child processes of the following three types.

Cache loader: This process runs at startup, loads information about the disk-based cache into memory, and then exits. Because it exits once its work is done, it does not keep consuming resources.

Cache manager: This process runs periodically and removes entries from the disk caches to keep them within the configured sizes.

Worker processes: These processes do all of the major work. They handle network connections, read from and write to disk, and communicate with upstream servers when NGINX is configured as a reverse proxy.
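The cache manager's job of keeping a cache within its configured size can be sketched as a least-recently-used eviction. NGINX's documentation describes the cache manager removing the least recently used data once the cache exceeds the `max_size` set on `proxy_cache_path`. The Python function below is only an in-memory illustration of that policy, not NGINX's implementation. The name `trim_cache` and the `(size_in_bytes, last_access_time)` entry format are hypothetical.

```python
from typing import Dict, Tuple

# Hypothetical entry format: cache key -> (size_in_bytes, last_access_time)
Entries = Dict[str, Tuple[int, float]]


def trim_cache(entries: Entries, max_size: int) -> Entries:
    """Return a copy of `entries` trimmed to at most `max_size` bytes.

    Least recently used entries (oldest last_access_time) are removed first,
    mirroring the policy a cache manager applies when the cache is too large.
    The input dictionary is not modified.
    """
    kept = dict(entries)
    total = sum(size for size, _ in kept.values())
    # sorted() builds the full eviction order up front, so deleting from
    # `kept` inside the loop is safe.
    for key in sorted(kept, key=lambda k: kept[k][1]):
        if total <= max_size:
            break
        total -= kept[key][0]
        del kept[key]
    return kept
```

With entries `a` (400 bytes, last used at time 1), `c` (500 bytes, time 2) and `b` (300 bytes, time 3), a limit of 800 bytes evicts only `a`, the least recently used entry.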

Summary:

Master:

- Monitors the workers.

- Handles signals and notifies the workers of events such as reconfiguration or upgrades.

Worker:

- Responds to client requests.

- Receives commands from the master.
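The master/worker split summarized above can be sketched in Python on a POSIX system. The master does the privileged setup once (binding the listening socket), then forks workers that inherit that socket and accept client connections from it. Finally the master stops the workers with a signal and reaps them. This only mirrors the shape of the design: real NGINX workers run a non-blocking event loop instead of the blocking `accept()` loop used here for brevity, and the real master does much more (re-reading configuration, replacing workers, and so on). The function name `serve_with_workers`, the reply text, and the use of `SIGTERM` for shutdown are assumptions made for this illustration.

```python
import os
import signal
import socket


def serve_with_workers(num_workers=2, num_requests=4):
    """Hypothetical master/worker demo (POSIX only, uses os.fork).

    Returns (worker_pids, replies) so the caller can see which worker
    answered each request.
    """
    # Master: privileged setup happens once, before any worker exists.
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    listener.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    listener.listen(16)
    port = listener.getsockname()[1]

    worker_pids = []
    for _ in range(num_workers):
        pid = os.fork()
        if pid == 0:
            # Worker: inherits the listening socket and serves clients from it.
            try:
                while True:
                    conn, _ = listener.accept()
                    with conn:
                        conn.recv(1024)  # read (and ignore) the request
                        conn.sendall(f"handled by worker {os.getpid()}".encode())
            finally:
                os._exit(0)  # never fall back into the master's code path
        worker_pids.append(pid)

    # The master never calls accept(); in this demo it plays the client.
    replies = []
    for _ in range(num_requests):
        with socket.create_connection(("127.0.0.1", port)) as client:
            client.sendall(b"GET / HTTP/1.0\r\n\r\n")
            # Read until the worker closes the connection.
            reply = b"".join(iter(lambda: client.recv(1024), b""))
            replies.append(reply.decode())

    # Master signals each worker to stop and reaps it (no zombie processes).
    for pid in worker_pids:
        os.kill(pid, signal.SIGTERM)
        os.waitpid(pid, 0)
    listener.close()
    return worker_pids, replies
```

Which worker accepts a given connection is decided by the operating system, so the replies may come from any of the forked workers; the master only sets things up, sends signals, and waits for its children.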

I hope this has been informative, and thank you for reading.